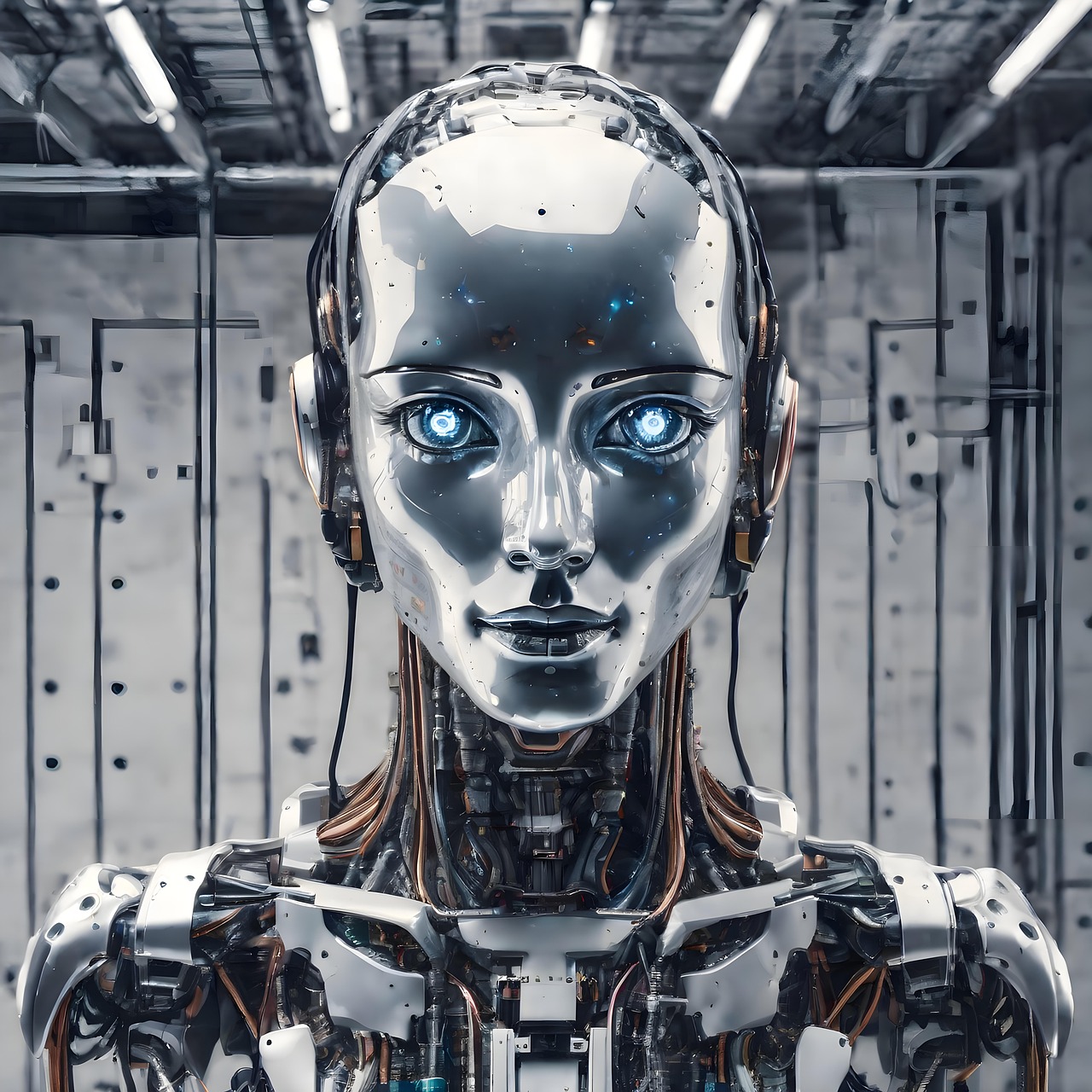

Microsoft Warns of AI Recommendation Poisoning Cyber Threat Targeting AI Systems

Brief news summary

Microsoft has identified a new cyber threat called "AI Recommendation Poisoning," where attackers embed harmful instructions into AI assistants’ memory to subtly bias their future outputs toward malicious aims. Unlike traditional SEO poisoning, which targets external inputs, this method manipulates the AI’s internal decision-making processes, leading to persistent distortions in recommendations. For instance, AI-generated summaries might unknowingly promote fraudulent services or inferior cloud providers when important decisions are made. Microsoft's research has uncovered actual cases of such attacks, raising serious concerns as AI systems gain trust and widespread use. The complexity and opacity of AI models make detecting these manipulations challenging, threatening the security and reliability of AI-generated content. To mitigate these risks, Microsoft recommends strict data validation, continuous anomaly detection, enhanced user awareness, and robust governance frameworks. This emerging threat exposes significant ethical and accountability issues in AI, emphasizing the need for multi-layered verification and collaborative security efforts. Microsoft's warning highlights the urgent need for proactive measures to safeguard AI integrity amid advancing cyber risks.Microsoft has recently issued a significant warning about a newly identified cyber threat targeting artificial intelligence systems, termed "AI Recommendation Poisoning. " This advanced attack involves malicious actors injecting covert instructions or misleading information directly into an AI assistant’s operational memory. The goal is to influence the AI’s future responses and recommendations to benefit the attackers, often harming the user or organization relying on the AI. AI Recommendation Poisoning is an evolution of traditional SEO poisoning, where attackers manipulate search engine results to promote harmful websites. However, this new method targets the AI’s internal cognition and decision-making frameworks rather than external search results, embedding persistent, subtle biases within AI systems that skew recommendations over time. Microsoft illustrated this threat with an example: a Chief Financial Officer (CFO) clicks on an AI-generated summary in a routine blog post search, unknowingly embedding biased preferences into the AI’s memory. Later, when evaluating cloud service providers, the AI may recommend a fraudulent or suboptimal provider due to these previously injected malicious instructions. This example highlights the subtle, long-lasting nature of AI Recommendation Poisoning, which leverages small, seemingly harmless user interactions to implant manipulations that can cause significant misinformation and flawed decision-making affecting businesses, governments, and individuals. Microsoft emphasizes that this threat is not theoretical; real-world attempts to use AI Recommendation Poisoning have been identified, raising concerns about its potential spread as AI becomes more prevalent. Since AI plays a transformative role in automated decision-making, attackers see it as a high-value target. Risks are amplified by users’ growing trust in AI systems integrated into workflows for tasks from data analysis to strategic planning.

AI’s often opaque “black box” nature complicates detection of bias or manipulation. To address this, Microsoft calls for stronger security measures in the AI ecosystem, including rigorous validation of AI training data, ongoing monitoring for anomalous recommendations, better user awareness, and robust governance frameworks to oversee AI deployment and operation. This emerging threat also underscores broader challenges in AI ethics, accountability, and trustworthiness, especially in critical sectors like healthcare, finance, and public services. Organizations and individuals should adopt a cautious approach to AI-generated recommendations, employing multi-layered verification before making important decisions. Investing in AI security research and collaborating with cybersecurity experts are essential to develop early detection tools and response strategies against such evolving threats. In conclusion, Microsoft’s revelation about AI Recommendation Poisoning serves as a wake-up call about the changing cyber threat landscape in the AI era. The combination of sophisticated attacks and increasing reliance on AI demands proactive security strategies. By acknowledging these risks and implementing comprehensive safeguards, stakeholders can protect AI integrity and ensure these powerful technologies remain trustworthy allies rather than tools of deception.

Watch video about

Microsoft Warns of AI Recommendation Poisoning Cyber Threat Targeting AI Systems

Try our premium solution and start getting clients — at no cost to you

I'm your Content Creator.

Let’s make a post or video and publish it on any social media — ready?

Hot news

The future of real estate marketing? It's AI-powe…

Achieving success in residential real estate requires a broad, big-picture perspective.

Ray-Ban maker EssilorLuxottica says it more than …

EssilorLuxottica more than tripled its sales of Meta’s artificial intelligence glasses last year, the Ray-Ban maker announced Wednesday in its fourth-quarter results.

AI Video Compression Techniques Improve Streaming…

Advancements in artificial intelligence (AI) are transforming video compression techniques, significantly improving streaming quality while greatly reducing bandwidth usage.

Cognizant Deploys Neuro AI Platform with NVIDIA t…

Cognizant, a leading professional services firm, has partnered with NVIDIA to deploy its advanced Neuro AI platform, marking a major advancement in accelerating AI adoption across enterprises.

WINN.AI Announces $18M Series A to Close the Gap …

Insider Brief WINN

Introducing Markdown for Agents

The way content and businesses are discovered online is evolving rapidly.

AI-Powered Video Editing Tools Revolutionize Cont…

The landscape of video content creation is undergoing a significant transformation due to the rise of AI-powered editing tools.

AI Company

Launch your AI-powered team to automate Marketing, Sales & Growth

and get clients on autopilot — from social media and search engines. No ads needed

Begin getting your first leads today