Silicon Valley's old motto, "Move fast and break things," continues to haunt the tech industry, as the recent clash between actor Scarlett Johansson and OpenAI shows. Johansson claimed that her voice was used in OpenAI's new product without her consent, a dispute that underscores fears in the creative industries about being replaced by artificial intelligence (AI). The incident draws attention to the responsibilities and boundaries that AI companies like OpenAI must observe. Although the AI giants have pledged to build responsible and safe products, attention has shifted toward worst-case scenarios and away from practical threats. As a result, the immediate risks of AI tools replacing jobs and discriminating against individuals have been overlooked. Questions also remain about how AI products such as OpenAI's GPT-4o and Google's Project Astra are safety-tested, and about how little is understood of why AI tools generate specific outputs.

Although voluntary agreements are in place, some argue that legally binding rules are needed to ensure that AI technologies are developed responsibly. Without official oversight, there is no way to be sure that companies are keeping their pledges. The EU's AI Act sets strict rules and penalties for non-compliance, but it also creates additional work for AI users. Establishing global governance principles that protect all nations is harder still. Regulation and policy-making lag behind the pace of innovation, which raises the question of whether tech giants will be willing to wait for regulatory frameworks to catch up.

Brief news summary

The recent dispute between Scarlett Johansson and OpenAI has raised concerns about the dangers AI poses to the creative industries. The conflict highlights two key issues: job displacement and tech giants putting innovation ahead of ethics. OpenAI, despite its non-profit origins, has faced criticism for a profit-driven approach, raising doubts about its commitment to responsible AI practices. Managing the development of AI requires clear boundaries and regulations, yet problems such as job displacement and algorithmic bias have received too little attention. Independent oversight is needed, along with guidelines that promote transparency and safety. Critics argue that voluntary agreements are insufficient and call for legally binding rules to foster responsible AI development. The European Union's AI Act is viewed as a significant step, although its impact on AI developers may be underestimated. The challenge lies in establishing global governance principles that extend beyond Western countries and China. Promising discussions have taken place at events such as the AI Seoul Summit, but regulatory efforts still lag behind technological advances. While government initiatives show promise, it remains uncertain whether tech giants will act before regulations are in place.

Hot news

AI in Retail: Personalizing Customer Experiences

Artificial intelligence (AI) is profoundly transforming the retail industry, ushering in a new era of personalized shopping experiences tailored to the unique preferences and behaviors of individual consumers.

Circle's Valuation and Regulatory Developments in…

The cryptocurrency industry is undergoing significant transformation as key players and regulatory environments evolve, signaling a new era for digital assets worldwide.

Robinhood (HOOD) News: Launches Tokenized Stocks …

Robinhood Expands Its Crypto Presence by Introducing Its Own Blockchain and Tokenized Stocks Tokenized versions of U

European CEOs Urge Brussels to Halt Landmark AI A…

A group of leading CEOs recently sent an open letter to European Commission President Ursula von der Leyen, expressing serious concerns about the current state of the proposed EU Artificial Intelligence Act.

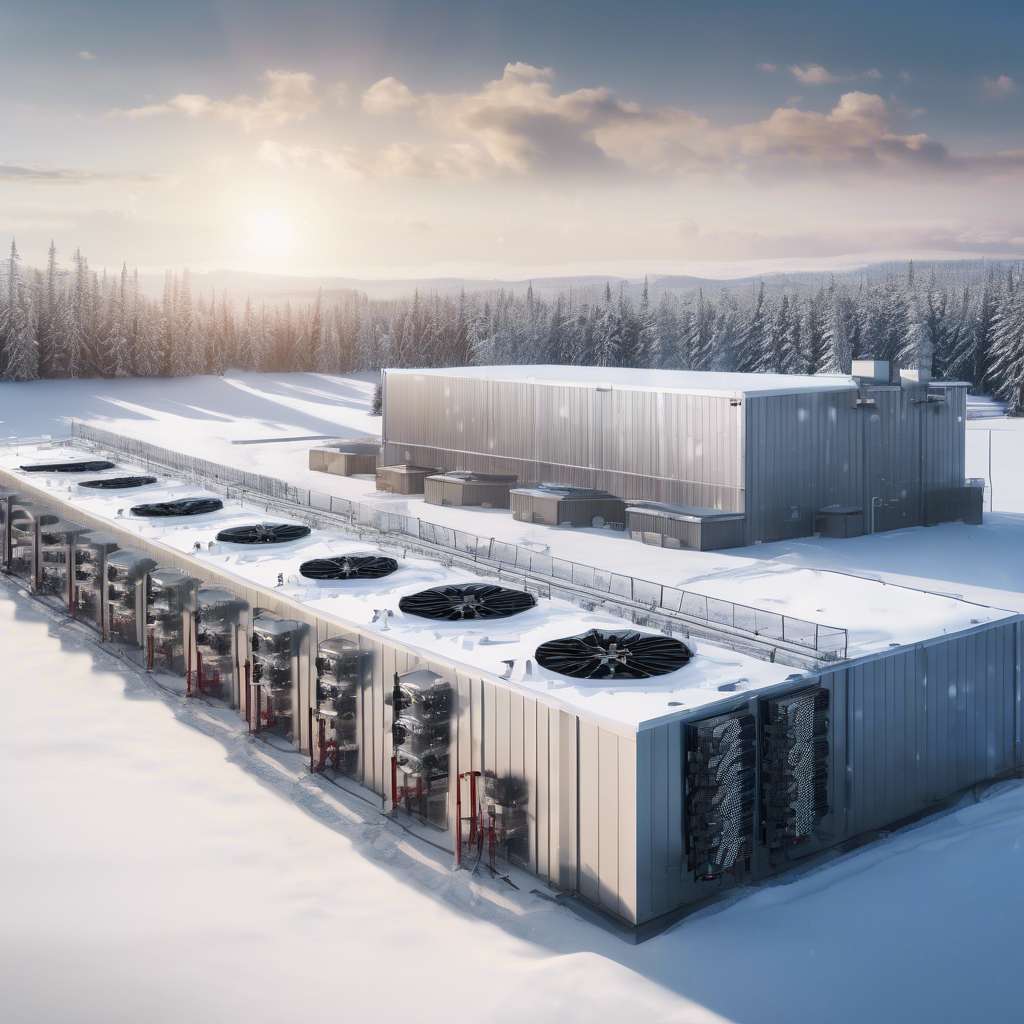

DMG Blockchain Reports 26% Bitcoin Mining Decline…

VANCOUVER, British Columbia, July 2, 2025 (GLOBE NEWSWIRE) – DMG Blockchain Solutions Inc.

Microsoft's AI Outperforms Doctors in Diagnosing …

Microsoft has achieved a major breakthrough in applying artificial intelligence to healthcare with its AI-driven diagnostic tool, the AI Diagnostic Orchestrator (MAI-DxO).

Rise of AI Companions Among Single Virginians

New data from Match reveals that 18% of single Virginians have incorporated artificial intelligence (AI) into their romantic lives, a significant increase from 6% the previous year.
